Kubernetes Ingress & TLS hosts explained

In recent years, Kubernetes has soared in popularity to become one of the most renowned open-source container management systems in the world. Its extensive feature set and comprehensive automation tools allow users to streamline their workflow by eliminating many of the manual tasks involved in deploying, scaling, and managing containerized applications.

When developing applications within a Kubernetes cluster, there are times when you need to expose your application to traffic from outside the cluster. By default, a Service is reachable only from inside the cluster network, so external clients cannot connect to it directly.

There are several ways to overcome this limitation, such as NodePort and LoadBalancer Services, each with its own trade-offs. One effective solution is Ingress, a Kubernetes API object that governs how external users access the Services in a cluster.

What is Kubernetes Ingress?

Ingress is an API object that manages external access to services within a Kubernetes cluster, typically HTTP. Ingress can provide load balancing, SSL termination, and name-based virtual hosting. It allows you to define rules for routing traffic to different services based on the requested host or path.

By configuring Ingress, you can expose multiple services under a single IP address, which simplifies traffic management and improves resource utilization.
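To illustrate name-based virtual hosting, here is a minimal sketch of an Ingress that sends two hostnames, both resolving to the Ingress's single IP address, to two different Services. The hostnames (shop.example.com, blog.example.com) and the Service names (shop, blog) are hypothetical, and the manifest assumes an Ingress Controller is already running in the cluster.

```yaml
# Hypothetical hostnames and Service names; adjust to your own cluster.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: name-based-vhosts
spec:
  rules:
  # Requests whose Host header is shop.example.com go to the "shop" Service.
  - host: shop.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: shop
            port:
              number: 80
  # Requests for blog.example.com arrive at the same IP but reach "blog".
  - host: blog.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: blog
            port:
              number: 80
```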

How Kubernetes Ingress Works

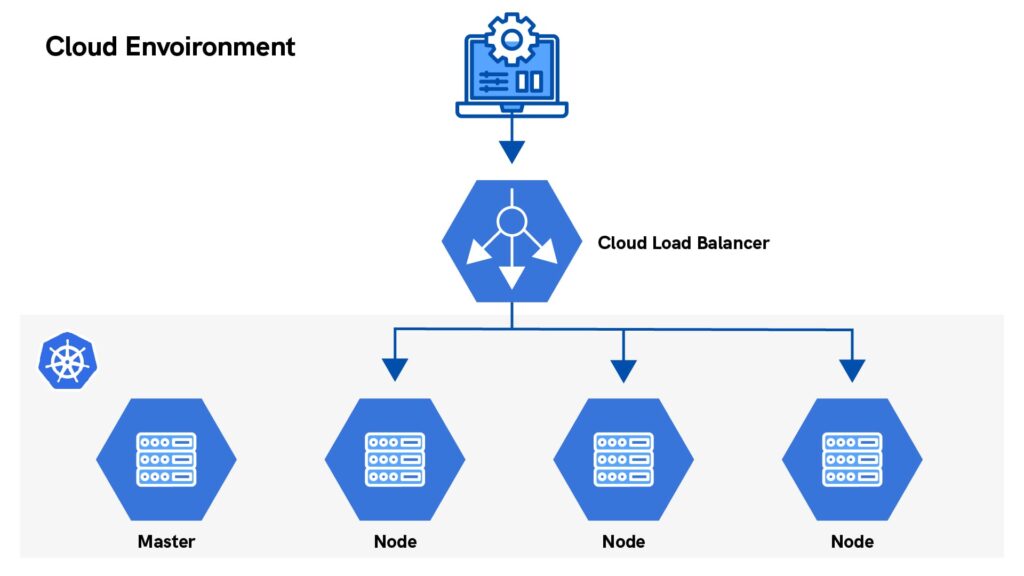

- Ingress Controller: An Ingress Controller is a specialized load balancer for Kubernetes environments. It watches the Kubernetes API server for updates to the Ingress resource and configures the load balancer based on the Ingress rules.

- Ingress Resources: These are a set of rules that define how incoming traffic should be routed to the services within the cluster. Ingress resources can specify multiple hostnames and paths, making it a powerful tool for directing traffic to different microservices.

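- Load Balancing: Ingress routes incoming requests to different backend Services according to its rules, and the traffic for each Service is spread across the Pods behind it to improve availability and reliability. More sophisticated strategies, such as session affinity, depend on the Ingress Controller you use.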
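Ingress rules point at Services rather than at individual Pods; the load balancing described above then happens across the Pods behind each Service, either directly via the Service's endpoints or through the Service itself, depending on the controller. As a sketch, this is what a backend Service might look like, assuming hypothetical Pods labelled app: web that listen on port 8080:

```yaml
# Hypothetical backend Service that an Ingress rule could reference by name.
apiVersion: v1
kind: Service
metadata:
  name: web
spec:
  # Pods carrying this label become the Service's endpoints.
  selector:
    app: web
  ports:
  - name: http
    port: 80          # port an Ingress backend would reference
    targetPort: 8080  # port the Pods actually listen on
```

An Ingress backend would reference it with service.name set to web and either service.port.number set to 80 or service.port.name set to http.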

What is TLS and How It Works with Ingress

Transport Layer Security (TLS) is a cryptographic protocol designed to provide secure communication over a computer network. Ingress can manage TLS certificates to ensure that traffic between clients and the Ingress endpoint is encrypted, enhancing security.

- TLS Termination: Ingress can terminate SSL/TLS at the load balancer level, offloading the encryption/decryption work from your backend services. This can simplify certificate management and improve performance.

- Secret Objects: TLS certificates are stored in Kubernetes as Secret objects. You can create a Secret containing your TLS certificate and key, and reference it in your Ingress resource.

- Configuration: To enable TLS, you define the TLS section in your Ingress resource, specifying the hosts and the Secret that contains the TLS certificate. When a request is made to an HTTPS endpoint, the Ingress controller uses the specified certificate to establish a secure connection.
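Putting the Secret and the TLS section together, a minimal sketch could look like the following. The Secret name, hostname, and backend Service are hypothetical, and the two data values are placeholders that must be replaced with your real base64-encoded certificate and key before the manifest is applied. The Secret has to live in the same namespace as the Ingress, and the host listed under tls should match the host in the rules (and the certificate).

```yaml
# Secret holding the certificate and private key; values are placeholders.
apiVersion: v1
kind: Secret
metadata:
  name: example-tls
type: kubernetes.io/tls
data:
  # Both values must be base64-encoded PEM data.
  tls.crt: <base64-encoded certificate>
  tls.key: <base64-encoded private key>
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: tls-example-ingress
spec:
  tls:
  # The controller terminates TLS for these hosts using the Secret above.
  - hosts:
      - secure.example.com
    secretName: example-tls
  rules:
  - host: secure.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: web
            port:
              number: 80
```

In practice the Secret is often generated from existing PEM files with kubectl create secret tls rather than written by hand.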

By leveraging Ingress with TLS, you ensure secure, efficient routing of external traffic to your Kubernetes services, enhancing both performance and security.

For further details, explore the Kubernetes API documentation to understand the intricate functionalities and capabilities of Kubernetes Ingress and TLS hosts.

Understanding Kubernetes Ingress

Kubernetes Ingress is essentially an assortment of routing rules that determine how users from outside the Kubernetes cluster can access applications running within the cluster.

Ingress is an API object that uses rules defined in an Ingress Resource to determine where external HTTP and HTTPS traffic should be routed within the cluster. By doing so, it eliminates the need for multiple load balancers or configuring individual nodes for external access, routing traffic for multiple HTTP/HTTPS services through a single external IP address.

The second part of Ingress, alongside the API object, is the Ingress Controller. The controller is essential because it is the concrete implementation of the Ingress concept: without it, nothing routes the traffic that matches the rules defined in the Ingress Resource.

Production environments can benefit greatly from the use of Ingress. In such environments, you’ll typically need support for both HTTP and HTTPS, authentication, and content-based routing. Ingress accommodates this by letting you configure and manage all of these within the cluster, although features such as authentication are usually provided through controller-specific settings.
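Features such as authentication are usually switched on through controller-specific annotations rather than core Ingress fields. The sketch below assumes the ingress-nginx controller with an IngressClass named nginx, and a hypothetical Secret named basic-auth holding an htpasswd file under the key auth; the hostname and the admin Service are also hypothetical. Other controllers use different annotations or custom resources for the same job.

```yaml
# ingress-nginx-specific sketch; other controllers use different mechanisms.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: auth-example
  annotations:
    # Ask ingress-nginx to enforce HTTP basic authentication.
    nginx.ingress.kubernetes.io/auth-type: basic
    # Hypothetical Secret containing an htpasswd file under the key "auth".
    nginx.ingress.kubernetes.io/auth-secret: basic-auth
    nginx.ingress.kubernetes.io/auth-realm: "Authentication Required"
spec:
  ingressClassName: nginx  # assumed class name for ingress-nginx
  rules:
  - host: admin.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: admin
            port:
              number: 80
```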

What is the Ingress Controller?

An Ingress Controller is a fundamental component of how Ingress works. Ingress Controllers are responsible for interpreting rules defined in the Ingress Resource and using this information to process external traffic. This traffic is then sent along the correct routes to the relevant application within the cluster.

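In practice, an Ingress Controller is an application running in a Kubernetes cluster that configures an HTTP load balancer according to Ingress Resources. An Ingress Resource on its own has no effect; a controller must be running to carry out the routing. Unlike most Kubernetes controllers, Ingress Controllers are not started automatically with a cluster, so you either deploy one yourself (for example, ingress-nginx) or use one supplied by your cloud provider.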

Ingress Controllers can be used with different load balancers, such as software load balancers or external hardware load balancers. Each load balancer requires a different implementation of the Ingress Controller, depending on the use case.
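Kubernetes expresses the choice of implementation through an IngressClass object: each class names the controller that should handle it, and an Ingress selects a class via ingressClassName. The sketch below assumes the ingress-nginx controller (whose controller identifier is k8s.io/ingress-nginx) is installed; the Ingress and Service names are hypothetical.

```yaml
# IngressClass bound to the ingress-nginx controller (assumed installed).
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: nginx
spec:
  controller: k8s.io/ingress-nginx
---
# An Ingress opts into that implementation through ingressClassName.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: uses-nginx-class
spec:
  ingressClassName: nginx
  rules:
  - http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: web
            port:
              number: 80
```

A cluster that also ran a controller for, say, an external hardware load balancer would add a second IngressClass carrying that controller's own identifier, and each Ingress would pick one by name. Adding the annotation ingressclass.kubernetes.io/is-default-class: "true" to an IngressClass makes it the default for Ingresses that omit ingressClassName.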

By leveraging Kubernetes Ingress and Ingress Controllers, you can efficiently manage external access to your Kubernetes applications, ensuring streamlined traffic routing and enhanced performance.

If the Ingress concept can be thought of as determining what you want to do with external traffic bound to the Kubernetes cluster, then the Ingress Controller would be the implementation of how this is handled.

Next, we’re going to be looking at the Ingress Resource.

The Ingress Resource in Kubernetes

So we’ve determined that the Ingress Resource is a collection of rules that define how external traffic is routed within the Kubernetes cluster. But what does one actually look like?

Example of an Ingress Resource

Here’s a minimal example of an Ingress Resource:

```yaml
apiVersion: networking.k8s.io/v1  # extensions/v1beta1 Ingress was removed in Kubernetes 1.22
kind: Ingress
metadata:
  name: test-ingress
spec:
  rules:
  - http:
      paths:
      - path: /testpath
        pathType: Prefix        # required in networking.k8s.io/v1
        backend:
          service:
            name: test          # Service that receives matching traffic
            port:
              number: 80
```

Let’s break down each aspect of the Resource line by line to better understand what it means.

- Lines 1-4: These fields are standard in any Kubernetes configuration file. `apiVersion`, `kind`, and `metadata` are self-explanatory.

- Lines 5-6: The `spec` field contains the information needed to configure a proxy server or load balancer. Its key element is a list of `rules` matched against all incoming requests; Ingress rules only support HTTP(S) traffic.

- Lines 7-10: Each HTTP rule provides the following information:
  - An optional `host` (e.g., foo.bar.com). No host is set in this example, so the rule applies to all hosts (`*`).
  - A list of `paths` (e.g., /testpath in this example), each with a `pathType` such as `Prefix`, `Exact`, or `ImplementationSpecific`.

- Lines 11-15: For each path, a `backend` is defined by the `service.name` field and either the `service.port.name` or the `service.port.number` field. Ingress traffic is usually sent directly to the endpoints that match a backend.

How the Ingress Resource is Processed

As the external traffic reaches the load balancer, it’s processed by the Ingress Controller like so:

- If a rule specifies a host, it applies only to requests for that host. If no host is provided, the rule applies to all inbound HTTP traffic.

- If the host and the path match the content of an incoming request, then the load balancer directs the traffic to the Service that’s referenced.

- Finally, traffic matching all rule criteria is sent to the backend, defined by the service and port names/numbers.
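The matching steps above can be sketched with a single Ingress that combines a host rule, two path types, and an optional spec.defaultBackend for requests that match nothing. The Services test, web, and fallback are hypothetical.

```yaml
# Hypothetical Services "test", "web" and "fallback" are assumed to exist.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: matching-example
spec:
  # Optional: receives requests that match none of the rules below.
  defaultBackend:
    service:
      name: fallback
      port:
        number: 80
  rules:
  - host: foo.bar.com
    http:
      paths:
      # Exact: matches /testpath only, not /testpath/ or /testpath/a.
      - path: /testpath
        pathType: Exact
        backend:
          service:
            name: test
            port:
              number: 80
      # Prefix "/": matches every other path on foo.bar.com.
      - path: /
        pathType: Prefix
        backend:
          service:
            name: web
            port:
              number: 80
```

With this manifest, a request for foo.bar.com/testpath goes to test (when several paths match, the longest one wins, and Exact beats Prefix on a tie), any other path on foo.bar.com goes to web, and, assuming no other Ingress claims them, requests for any other host fall through to fallback.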

An Ingress with no rules sends all traffic to a single default backend. The default backend is typically a configuration option of the Ingress Controller rather than something specified in the Resource itself. If none of the hosts or paths match an HTTP request, that traffic is also routed to the default backend.

For a more detailed explanation, you can check out the Kubernetes Ingress documentation.

By understanding the Ingress Resource and how it operates within Kubernetes, you can effectively manage external traffic, ensuring your applications are accessible and performant.

TLS in Kubernetes Ingress

With security being a paramount concern for any business today, encrypting external traffic routed to applications within a Kubernetes cluster is essential. This can be achieved by using TLS certificates in the load balancer.

The two fundamental components required for the load balancer to complete HTTPS handshakes are the certificate and the private key. Without both, external traffic cannot be encrypted.

When configuring Ingress for TLS encryption, the method used will depend on the type of Ingress Controller being implemented. However, the general concepts remain consistent across different implementations.

How TLS in Ingress Works

When the load balancer accepts HTTPS connections, the traffic between the client and the load balancer is encrypted using TLS. The load balancer then routes each request to an application on the backend of the cluster, based on the request’s host name and path.

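A single certificate can secure all services in the Kubernetes cluster, provided it covers every hostname you serve (for example, a wildcard or multi-domain certificate). In a production environment, however, you may want a separate certificate for each service. Ingress accommodates this by allowing multiple certificates, each listed in its own entry of the `tls` section with its hosts and Secret.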

Once traffic reaches the load balancer, it utilizes Server Name Indication (SNI) to determine which TLS certificate to provide to the client, based on the domain name in the TLS handshake. Traffic is then sent to the relevant backend, depending on the domain name defined in the request.

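What happens when the load balancer cannot match a certificate depends on the Ingress Controller. On Google Kubernetes Engine, for example, if the client doesn’t use SNI or requests a domain name that doesn’t match the Common Name (CN) of any of the TLS certificates, the load balancer uses the first certificate listed in the Ingress. Other controllers may fall back to a default certificate of their own instead.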
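Serving several hostnames with separate certificates amounts to listing more than one entry under tls. As a minimal sketch with hypothetical hostnames, Secrets, and Services, the controller would present shop-tls or blog-tls depending on the SNI hostname the client sends:

```yaml
# Two hostnames, each served with its own certificate via SNI.
# Secret names, hostnames and Services are hypothetical placeholders.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: multi-cert-ingress
spec:
  tls:
  - hosts:
      - shop.example.com
    secretName: shop-tls
  - hosts:
      - blog.example.com
    secretName: blog-tls
  rules:
  - host: shop.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: shop
            port:
              number: 80
  - host: blog.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: blog
            port:
              number: 80
```

On load balancers that fall back to the first certificate listed, as described above, clients that send no SNI would receive shop-tls here.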

For more detailed guidance on implementing multi-SSL configurations in Ingress, refer to the Google Cloud Kubernetes Engine documentation.

By incorporating TLS with Ingress, you can enhance the security of your Kubernetes applications, ensuring encrypted communications and protecting sensitive data from potential threats.

In Conclusion

We hope this guide has provided you with a comprehensive understanding of how Kubernetes Ingress works and the concept of multiple TLS hosts within a cluster. By leveraging Kubernetes Ingress and TLS, you can significantly enhance the security and efficiency of your containerized applications.

At UKHost4u, we offer a variety of plans to help you set up your Kubernetes host network effortlessly. Our Kubernetes hosting plans are designed to provide robust security, scalability, and performance for your applications. Visit our website today to see how a Kubernetes hosting plan can benefit your applications!

If you have any questions or need further assistance, don’t hesitate to contact us. Our dedicated support team is always ready to help.

Contact Us:

- Live Chat: Available Monday to Friday, 9am to 5pm on our website.

- Support Portal: Access our support portal.

- Telephone: Call us at 0330 088 5790 available Monday to Friday, 9am to 5pm.

Discover the benefits of Kubernetes hosting with UKHost4u and elevate your applications to the next level.