Kubernetes vs. Docker – Which Should I Use?

Using containers to manage applications has become an increasingly attractive choice for developers, offering scalability, flexibility, and efficiency. However, with so many options available, developers often find themselves asking: Kubernetes vs. Docker – which should I use?

When diving into the world of container management systems, it’s not uncommon to come across the ‘Kubernetes vs. Docker’ debate. However, this comparison can be somewhat misleading and requires a closer look to truly understand the roles each of these tools plays.

In reality, Kubernetes and Docker aren’t direct competitors; they complement each other in the containerization ecosystem. The two platforms are built around different concepts to provide solutions to distinct challenges.

Essentially, Docker is a containerization platform that allows you to build, ship, and run applications in containers. Kubernetes, on the other hand, is a container orchestrator: it schedules and manages containers, including those built with Docker, across a cluster of machines. Because both focus on containerization, there is often confusion about how they differ and when to use one over the other.

In this blog post, we aim to clear up any confusion between Kubernetes and Docker by explaining how each works and how they compare against each other, helping you make an informed decision based on your specific needs.

Kubernetes vs. Docker: The Benefits of Using Containers

Both Kubernetes and Docker are built around the concept of running applications in containers. But before diving into the details of each platform, it’s essential to grasp the purpose of containers and the advantages they offer.

Key Benefits of Containers:

- Reduced Overhead: Unlike traditional virtual machines (VMs), containers are lightweight because they don’t require a full guest operating system; instead, they share the host’s kernel. This significantly reduces resource consumption, making containers more efficient and cost-effective.

- Portability: Containers allow applications to be deployed consistently across various environments, whether on different operating systems or hardware platforms. This portability ensures that applications run smoothly regardless of where they are deployed, reducing the infamous “it works on my machine” problem.

- Isolation: Containers encapsulate an application and its dependencies, creating an isolated environment. Because of this isolation, if one application fails, it generally doesn’t affect other applications running on the same host, leading to more stable and reliable deployments.

- Simplified Management: Operations such as creating, destroying, and replicating containers are straightforward and fast. This simplicity accelerates the development process, allowing teams to focus more on coding and less on managing infrastructure.

By understanding these benefits, you can better appreciate the roles of Kubernetes and Docker in managing containerized applications, helping you decide which tool is the best fit for your needs.

What is Docker?

Docker is an open-source platform that leverages operating system virtualization to deploy software in lightweight, portable packages known as containers. Along with deploying these containers, Docker provides a suite of tools that simplify the process of developing, shipping, and running applications within these containers.

Containers are inherently lightweight because they do not require a hypervisor; instead, they share the host machine’s kernel. Although each container is isolated with its own software, libraries, and configuration files, containers can still communicate with one another through well-defined channels such as networks. This combination of isolation and communication makes Docker a powerful platform for developers, simplifying and streamlining the entire application development and deployment process.

One of Docker’s key features is its use of images: read-only templates that package an application together with its code, libraries, and configuration, and from which containers are created. Because an image carries everything the application needs, it runs the same way on any host with a compatible kernel and CPU architecture, regardless of how that host is otherwise configured, which makes Docker an incredibly versatile tool. Docker images can be easily shared over the internet via Docker Hub, an online registry where users can download pre-built images created by the community or upload their own for others to use.
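To make the Docker Hub sharing model concrete, the sketch below shows how a short image name such as nginx expands to a fully qualified reference. It is a simplified, hypothetical re-implementation of Docker’s normalization rules (the names normalize and ImageRef are illustrative, not part of any Docker API). It assumes that references without a registry hostname come from Docker Hub (docker.io), that official images live under the library/ namespace, and that a missing tag means latest; it skips edge cases such as character validation.

```python
from typing import NamedTuple, Optional

# Simplified sketch of how Docker expands short image names (not Docker's own code).
DEFAULT_REGISTRY = "docker.io"  # Docker Hub
DEFAULT_TAG = "latest"


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: Optional[str]
    digest: Optional[str]

    def __str__(self) -> str:
        ref = f"{self.registry}/{self.repository}"
        if self.tag:
            ref += f":{self.tag}"
        if self.digest:
            ref += f"@{self.digest}"
        return ref


def normalize(reference: str) -> ImageRef:
    """Expand a short reference like 'nginx' into registry/repository:tag."""
    name, digest = reference, None
    if "@" in name:  # a content digest pins an exact image, e.g. name@sha256:...
        name, digest = name.split("@", 1)

    tag = None
    colon = name.rfind(":")
    if colon > name.rfind("/"):  # a colon after the last slash separates the tag
        name, tag = name[:colon], name[colon + 1:]

    first, sep, rest = name.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, repository = first, rest  # first component is a registry host
    else:
        registry, repository = DEFAULT_REGISTRY, name
        if "/" not in repository:
            repository = f"library/{repository}"  # official images on Docker Hub

    if tag is None and digest is None:
        tag = DEFAULT_TAG
    return ImageRef(registry, repository, tag, digest)


# Example: str(normalize("nginx")) == "docker.io/library/nginx:latest"
```

This is why docker pull nginx and docker pull docker.io/library/nginx:latest refer to the same image, while a name like ghcr.io/owner/app points at a different registry entirely.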

How Docker Works

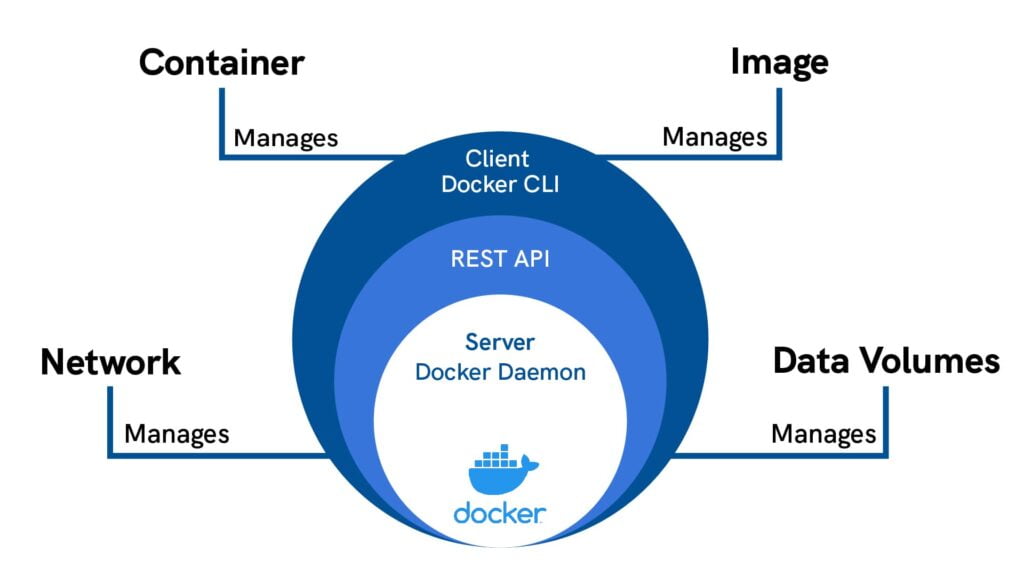

When talking about Docker, most people are referring to the Docker Engine, the client-server application that facilitates the creation and management of Docker containers.

Components of Docker Engine:

- Server (Daemon Process): This is the long-running program (dockerd) that manages Docker objects such as containers, images, networks, and volumes.

- REST API: The REST API defines the interfaces through which programs can communicate with the daemon process, enabling control over Docker services.

- CLI Client: The command-line interface (the docker command) interacts with the daemon process via the REST API, allowing users to create, run, and manage containers directly from the terminal.
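The split between CLI, REST API, and daemon can be seen by talking to the daemon without the docker command at all. The Python sketch below is a minimal example, assuming a Linux host where dockerd listens on its default Unix socket (/var/run/docker.sock) and the current user is allowed to use it. It sends the same request that docker ps makes, GET /containers/json, using only the standard library; the helper names (UnixHTTPConnection, containers_path, list_containers) are illustrative.

```python
import http.client
import json
import socket

# Assumption: default socket path for a local Docker Engine on Linux.
DOCKER_SOCKET = "/var/run/docker.sock"


class UnixHTTPConnection(http.client.HTTPConnection):
    """An HTTPConnection that talks to a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str):
        super().__init__("localhost")  # host name is only used for the Host header
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.connect(self.socket_path)
        self.sock = sock


def containers_path(include_stopped: bool = False) -> str:
    # Engine API endpoint behind `docker ps`; all=true also returns stopped
    # containers, like `docker ps -a`.
    return "/containers/json?all=true" if include_stopped else "/containers/json"


def list_containers(socket_path: str = DOCKER_SOCKET, include_stopped: bool = False) -> list:
    """Ask the Docker daemon for its containers via the REST API."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", containers_path(include_stopped))
        resp = conn.getresponse()
        body = resp.read()
        if resp.status != 200:
            raise RuntimeError(f"Docker daemon returned HTTP {resp.status}: {body!r}")
        return json.loads(body)
    finally:
        conn.close()


# Usage (requires a running daemon):
# for c in list_containers():
#     print(c["Id"][:12], c["Image"], c["Status"])
```

The daemon answers with the same JSON the CLI formats into its table, which is why scripts and third-party tools can drive Docker just as well as the docker command can.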

In practice, the CLI uses the REST API to control the daemon process, either through direct CLI commands or through scripts. The daemon process then creates and manages the relevant Docker objects, such as containers, images, networks, and volumes.

Docker’s streamlined approach to containerization, coupled with its powerful tools and community-driven ecosystem, makes it an invaluable resource for developers looking to create, test, and deploy applications quickly and efficiently.

For more detailed information on how Docker works, you can refer to the Docker Engine documentation, explore the extensive image library on Docker Hub, or visit Docker’s official website.

What Docker Does Well

Docker excels in providing a streamlined approach to packaging and configuring software applications within portable containers. This containerization system simplifies the development and deployment of complex software applications by bundling everything needed to run the application—such as code, libraries, and configurations—into a single, portable unit.

One of Docker’s greatest strengths lies in its ability to combine multiple containers to create sophisticated, multi-tiered systems with ease. For instance, if you’re building a system that requires a database server, an application server, and a web server to operate concurrently, Docker allows you to deploy pre-configured images of each component, drastically reducing the time and effort needed to set everything up.
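As a rough illustration of the database, application, and web server example, the Python sketch below builds (and, with dry_run=False, runs) the docker CLI commands for a three-container stack on a user-defined network, where Docker’s built-in DNS lets containers reach each other by name. The network name demo-net, the example/app:1.0 image, and its DATABASE_HOST variable are hypothetical placeholders; postgres:16 and nginx:1.25 are public Docker Hub images, and a real web tier would also need its own proxy configuration. Many teams describe such a stack declaratively with Docker Compose instead.

```python
import shlex
import subprocess
from typing import List

NETWORK = "demo-net"  # hypothetical network name


def stack_commands(network: str = NETWORK) -> List[List[str]]:
    """docker CLI invocations for a database, application, and web tier."""
    return [
        # A user-defined bridge network lets containers resolve each other by name.
        ["docker", "network", "create", network],
        # Database tier: the official postgres image expects POSTGRES_PASSWORD.
        ["docker", "run", "-d", "--name", "db", "--network", network,
         "-e", "POSTGRES_PASSWORD=example", "postgres:16"],
        # Application tier: hypothetical image that reads DATABASE_HOST.
        ["docker", "run", "-d", "--name", "app", "--network", network,
         "-e", "DATABASE_HOST=db", "example/app:1.0"],
        # Web tier: publish container port 80 on host port 8080.
        ["docker", "run", "-d", "--name", "web", "--network", network,
         "-p", "8080:80", "nginx:1.25"],
    ]


def deploy(commands: List[List[str]], dry_run: bool = True) -> None:
    for cmd in commands:
        if dry_run:
            print(shlex.join(cmd))  # show what would run
        else:
            subprocess.run(cmd, check=True)  # stop at the first failing command


# deploy(stack_commands())                 # print the commands
# deploy(stack_commands(), dry_run=False)  # actually start the stack
```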

Another advantage of Docker is its ability to handle diverse software environments. If different servers in your system require varying versions of specific libraries or dependencies, Docker’s containerization ensures that each environment is isolated, preventing conflicts and maintaining system stability.

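Moreover, Docker’s container system can improve security by isolating each application. If one container is compromised, that isolation makes it harder for an attacker to reach the others, reducing the risk of widespread damage. Because containers share the host’s kernel, however, this isolation is weaker than that of a full virtual machine, so it works best alongside other security measures.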

What Docker Doesn’t Do Well

While Docker has numerous advantages, it also has some limitations that developers should consider.

Performance: One drawback of Docker is the overhead it adds. Containers are far more lightweight than traditional virtual machines, since they run on the host kernel rather than on virtualized hardware, but layers such as container networking and storage drivers can make a containerized application slightly slower than the same application running directly on the host. This difference is usually minimal and acceptable for most applications, but it may be a concern where latency and speed are critical. If your application demands the highest possible performance, Docker might not be the ideal choice.

Simultaneous Services: Docker is not always the best fit when several services need to share the same environment closely. Docker’s design favors one main process per container, each isolated from the others, so tightly coupled services that expect to share a filesystem or process space can be awkward to containerize. In such cases, alternative solutions may offer better compatibility and performance.

Understanding these strengths and limitations will help you make an informed decision about whether Docker is the right tool for your development and deployment needs.

What is Kubernetes?

Kubernetes, often abbreviated as K8s, is an open-source container orchestration system designed to automate the deployment, scaling, and management of containerized applications. Originally developed by Google, Kubernetes has become the industry standard for managing containers in production environments due to its robust automation capabilities and scalability.

In simple terms, Kubernetes simplifies the coordination and operation of applications running within containers across a cluster of machines. This orchestration ensures that applications remain online, secure, and running efficiently, even as demand fluctuates.

Kubernetes is not a standalone alternative to container platforms like Docker; instead, it complements them by providing the orchestration layer necessary to manage containers at scale. This synergy is why Kubernetes is often used alongside Docker and other containerization tools.

How Kubernetes Works

Kubernetes operates by connecting multiple physical or virtual machines into a unified cluster, creating a shared network that facilitates communication between these machines. This cluster becomes the foundation where all Kubernetes components, workloads, and capabilities are managed.

To grasp the workings of Kubernetes, it’s essential to understand two fundamental concepts:

- Node: A node is a single machine, either virtual or physical, that Kubernetes manages. Nodes are the workhorses of the Kubernetes cluster, running the applications and managing the resources allocated to them.

- Pod: A pod is the smallest deployable unit in Kubernetes, consisting of one or more containers that need to run together. For instance, an application might require both a web server and a caching server to function; Kubernetes would encapsulate these containers within a single pod, ensuring they operate in tandem.
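For the web server and caching server example, a Pod could be described by a manifest like the one the Python sketch below generates. Kubernetes accepts manifests in JSON as well as YAML, so the output can be saved to a file and applied with kubectl apply -f pod.json. The pod name, label, and image tags are illustrative choices. Because containers in the same pod share a network namespace, the web container could reach the cache on localhost:6379.

```python
import json


def web_with_cache_pod(name: str = "web-with-cache") -> dict:
    """A Pod manifest with two containers that are scheduled and run together."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "containers": [
                {   # web server container
                    "name": "web",
                    "image": "nginx:1.25",
                    "ports": [{"containerPort": 80}],
                },
                {   # caching server; shares the pod's network namespace with "web"
                    "name": "cache",
                    "image": "redis:7",
                    "ports": [{"containerPort": 6379}],
                },
            ]
        },
    }


if __name__ == "__main__":
    # Save the output as pod.json, then: kubectl apply -f pod.json
    print(json.dumps(web_with_cache_pod(), indent=2))
```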

Kubernetes’ architecture can be broken down into two core components:

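- Master Node: The master node, now usually called the control plane, is the command center of the Kubernetes cluster. It serves as the primary point of contact and is responsible for managing the cluster’s overall operations. It runs components such as the API server (kube-apiserver), the scheduler (kube-scheduler), and the controller manager (kube-controller-manager), and typically the etcd key-value store that holds the cluster’s state. Together, these components coordinate all activities, handle communication between different components, and provide an API for users and clients to interact with the cluster.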

- Worker Nodes: Worker nodes execute the workloads assigned by the Master node. These nodes run the containers, manage local and external resources, and adjust network configurations to ensure traffic is routed correctly within the cluster. The worker nodes are where the actual applications live and run, performing the tasks necessary to keep your services operational.
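One job of the master node is deciding which worker node runs each pod. The real scheduler (kube-scheduler) first filters out nodes that cannot fit a pod and then scores the remaining ones. The toy Python sketch below mimics that two-step shape using only CPU requests in millicores and a single, hypothetical scoring rule (prefer the node with the most free CPU); it is a teaching aid, not how kube-scheduler is implemented.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Node:
    name: str
    cpu_capacity_m: int        # allocatable CPU, in millicores
    cpu_requested_m: int = 0   # CPU already requested by pods placed here

    def free_cpu(self) -> int:
        return self.cpu_capacity_m - self.cpu_requested_m


def schedule(pod_requests: Dict[str, int], nodes: List[Node]) -> Dict[str, Optional[str]]:
    """Place each pod on a node; None means no node fits (the pod stays Pending)."""
    placement: Dict[str, Optional[str]] = {}
    for pod, request_m in pod_requests.items():
        # Filter: keep only nodes with enough unrequested CPU.
        feasible = [n for n in nodes if n.free_cpu() >= request_m]
        if not feasible:
            placement[pod] = None
            continue
        # Score: prefer the node with the most free CPU, which spreads load.
        best = max(feasible, key=lambda n: n.free_cpu())
        best.cpu_requested_m += request_m
        placement[pod] = best.name
    return placement
```

For example, with worker-1 (1000m) and worker-2 (2000m), pods requesting 500m, 1500m, 800m, and 900m land on worker-2, worker-2, worker-1, and nowhere, respectively: the last pod stays unscheduled until capacity frees up.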

By automating the orchestration of containers, Kubernetes allows businesses to manage complex applications with ease, ensuring high availability, scalability, and efficiency.

Kubernetes Nodes and Their Role

Each worker node in a Kubernetes cluster runs an agent called the kubelet. The kubelet oversees the node, ensuring that the containers scheduled onto it are running as expected and reporting their status to the master node. Each node also runs a container runtime, such as containerd or CRI-O, which handles the lifecycle of the containers themselves. Since Kubernetes 1.24, Docker Engine is no longer supported directly as a runtime, but it can still be used through the cri-dockerd adapter, and images built with Docker run on any of these runtimes.

Communication between the master node and the worker nodes is facilitated by the Kubernetes API. This powerful API is the backbone of Kubernetes, allowing users to query, manage, and manipulate objects within the Kubernetes architecture, including pods, namespaces, and events. The API ensures seamless coordination across the cluster, enabling the efficient management of containerized applications.
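Because everything goes through the Kubernetes API, you can also query it directly. The Python sketch below lists the pod names in a namespace by calling the API’s pods endpoint, the same resource kubectl get pods reads. It assumes kubectl proxy is running locally on its default address (http://127.0.0.1:8001), so the proxy takes care of authentication; the helper names are illustrative.

```python
import json
import urllib.request
from typing import List
from urllib.parse import quote

# Assumption: `kubectl proxy` is running and listening on its default address.
API_BASE = "http://127.0.0.1:8001"


def pods_path(namespace: str = "default") -> str:
    # Core (v1) API path for the pods in one namespace.
    return f"/api/v1/namespaces/{quote(namespace, safe='')}/pods"


def list_pod_names(namespace: str = "default", base: str = API_BASE) -> List[str]:
    """Return the names of the pods in a namespace."""
    with urllib.request.urlopen(base + pods_path(namespace), timeout=10) as resp:
        pod_list = json.load(resp)  # a PodList object
    return [item["metadata"]["name"] for item in pod_list["items"]]


# Usage (with `kubectl proxy` running): print(list_pod_names("kube-system"))
```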

What Kubernetes Does Well

Kubernetes shines in production environments, where its advanced automation capabilities can significantly streamline daily operations. One of its standout features is self-healing—a capability that automatically replaces unresponsive or failed containers, minimizing the risk of downtime and ensuring your applications remain available.
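Self-healing follows a reconciliation pattern: a controller repeatedly compares the desired state (for example, three replicas) with what is actually running and acts on the difference. The Python sketch below is a deliberately simplified, hypothetical version of one pass of such a loop. In Kubernetes the work is split between the kubelet, which restarts failing containers, and controllers such as the ReplicaSet controller, which replace missing pods.

```python
from dataclasses import dataclass
from itertools import count
from typing import List


@dataclass
class Replica:
    name: str
    healthy: bool = True


_next_id = count(1)  # source of names for replacement replicas (illustrative)


def reconcile(running: List[Replica], desired: int) -> List[Replica]:
    """One pass of a toy control loop: converge toward `desired` healthy replicas."""
    # Discard replicas that failed their health check.
    alive = [r for r in running if r.healthy]
    # Start replacements until the desired count is reached.
    while len(alive) < desired:
        alive.append(Replica(name=f"replica-{next(_next_id)}"))
    # If the desired count was lowered, keep only that many.
    return alive[:desired]
```

In a real cluster this comparison runs continuously, so a replica that fails at any point is noticed and replaced on the next pass rather than waiting for an operator.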

Scalability is another area where Kubernetes excels. With horizontal pod autoscaling, Kubernetes can automatically respond to spikes in resource demand by adding pod replicas as needed. For instance, if your application experiences a sudden surge in traffic, Kubernetes can deploy additional containers to handle the increased load, ensuring consistent performance.
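The Kubernetes documentation gives the core calculation behind the Horizontal Pod Autoscaler as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). The Python sketch below applies that formula, skips scaling when the ratio is within a tolerance (0.1 mirrors the controller’s default), and clamps the result to minimum and maximum replica counts. The real controller also accounts for missing metrics, pod readiness, and stabilization windows, which this sketch leaves out.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Replica count suggested by the HPA scaling formula, clamped to [min, max]."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to the target: don't scale
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 3 pods averaging 90% CPU against a 60% target: ceil(3 * 1.5) = ceil(4.5) = 5 pods
```

Rounding up means the autoscaler errs on the side of capacity during a spike, while the tolerance band keeps small metric fluctuations from causing constant scaling.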

Kubernetes also simplifies the deployment process, especially when rolling out updates. With a rolling update, Kubernetes runs the new version alongside the existing one, gradually replacing old pods with new ones and waiting for the new pods to become ready before phasing out the old version; if something goes wrong, the rollout can be rolled back. This approach minimizes downtime and reduces the risk of deployment errors.
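The rolling-update behavior can be sketched as a small simulation. Kubernetes Deployments control the pace with two settings: maxSurge (how many extra pods may exist above the desired count) and maxUnavailable (how many may be missing). Kubernetes defaults both to 25%; the Python sketch below instead takes absolute numbers with illustrative defaults, and it assumes, unrealistically, that every new pod becomes ready immediately, whereas the real controller waits for readiness.

```python
from typing import List, Tuple


def rolling_update(replicas: int, max_surge: int = 1,
                   max_unavailable: int = 0) -> List[Tuple[int, int]]:
    """Simulate a rolling update; returns (old_pods, new_pods) after each step."""
    if max_surge == 0 and max_unavailable == 0:
        # Neither extra nor missing pods allowed: the rollout could never progress.
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    old, new = replicas, 0
    steps = [(old, new)]
    while old > 0 or new < replicas:
        # Surge: start new-version pods without exceeding replicas + max_surge in total.
        room = max(0, replicas + max_surge - (old + new))
        new += min(room, replicas - new)
        # Retire old pods while keeping at least replicas - max_unavailable running
        # (assumes new pods are ready as soon as they are created).
        excess = max(0, (old + new) - (replicas - max_unavailable))
        old -= min(excess, old)
        steps.append((old, new))
    return steps
```

With three replicas, max_surge=1, and max_unavailable=0, the steps are (3, 0), (2, 1), (1, 2), (0, 3): at every point at least three pods are serving traffic, which is how a rolling update avoids downtime.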

These features, among others, make Kubernetes an invaluable tool for managing complex, large-scale applications in dynamic environments.

What Kubernetes Doesn’t Do Well

Despite its many advantages, Kubernetes is not without its challenges. One of the most significant drawbacks is its complexity. Kubernetes is a powerful tool, but harnessing its full potential requires a deep understanding of its components and operations. The learning curve can be steep, often requiring significant time and effort to master.

The complexity of Kubernetes extends beyond initial setup. Ongoing management involves using specialized administrative tools like kubectl, which may require additional training for your team. This can lead to a temporary dip in productivity as your team adapts to the new workflow.

Transitioning to Kubernetes from another platform can also be cumbersome. Applications may need to be reconfigured or adapted to run smoothly on Kubernetes, and existing processes might require modification. The level of effort needed varies depending on the application but can be substantial, particularly for complex systems.

Finally, Kubernetes might be overkill for simpler applications. If your project is straightforward, with limited resource requirements and a small user base, Kubernetes may not offer enough benefits to justify its use. In such cases, the additional cost and complexity might outweigh the advantages, making other, simpler solutions more suitable.

In Conclusion

Now that we’ve provided an overview of Kubernetes and Docker, their functionalities, and their respective advantages and disadvantages, you should have a clearer idea of which platform best suits your needs.

As we’ve explained, Kubernetes and Docker are not direct competitors but are instead complementary tools that work well together. Kubernetes is often used in conjunction with Docker to maximize efficiency. Docker offers an exceptional containerization system for running your applications, while Kubernetes provides powerful tools for managing these containers at scale. Depending on the application you’re developing, utilizing both platforms might be your best strategy.

At UKHost4u, we offer a variety of plans that are perfect for launching a Docker deployment or managing a Kubernetes cluster. No matter what type of application you’re developing, our tools will help you streamline and enhance every step of the development cycle. Explore our Docker Hosting Plans and Kubernetes Hosting Plans to find the best solution for your needs.

If you have any questions or need further assistance, feel free to contact our support team at any time.